Hi guys,

I have a home made capacitive sensor I intend to use to measure the depth of water with.

It seems to work fine, but here is the issue.

I am *not* interested in the absolute value of the capacitance in µF, nF, etc.

The test sensor is a foot long. When it is completely out of the water reservoir, I get a reading of 10. This would be the capacitance of the cable, connector, etc.

When it's fully immersed, the reading is 60.

Now I need to output the reading as a percentage on a scale of 1 to 100. So 10 would correspond to 1 (or 0?), and 60 to 100.

So is calculating the percentage as simple as `(Actual_Reading - Reading_Min) * 100 / (Reading_Max - Reading_Min)`?
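For reference, here is a minimal Python sketch of that two-point formula. The function name and the clamping to the 0 to 100 range are my own additions (hypothetical, not from any library); the default endpoints are the 10 and 60 readings above. With this formula the "out of water" reading maps to 0, not 1. If you run it on a microcontroller with integer arithmetic, keeping the multiply by 100 before the divide, as your formula already does, avoids losing precision to truncation.

```python
def reading_to_percent(reading, reading_min=10.0, reading_max=60.0):
    """Map a raw reading linearly onto 0-100 % using two calibration endpoints.

    reading_min: reading with the probe fully out of the water (10 in the post).
    reading_max: reading with the probe fully immersed (60 in the post).
    """
    span = reading_max - reading_min
    if span == 0:
        raise ValueError("reading_max must differ from reading_min")
    percent = (reading - reading_min) * 100.0 / span
    # Clamp so noise just outside the calibrated range doesn't give -3 % or 104 %.
    return max(0.0, min(100.0, percent))


print(reading_to_percent(10))  # 0.0
print(reading_to_percent(35))  # 50.0
print(reading_to_percent(60))  # 100.0
```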

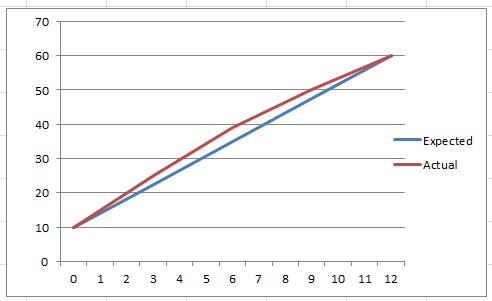

I plotted the actual readings taken at 0%, 25%, 50%, 75% and 100% immersion against the values expected from this formula, and there is a slight discrepancy.

The x-axis is the length of the probe under water in inches, and the y-axis is the reading.
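Rather than calibrating from the two endpoints alone, one option is to fit a straight line through all five calibration points by least squares. This spreads the error across the whole range instead of forcing it to zero at the ends. Below is a minimal Python sketch, assuming the 12-inch probe and the plotted axes (depth in inches on x, reading on y). The function names are hypothetical, and the middle three readings are placeholders because the real values only appear in the plot, so replace them with your own measurements.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def reading_to_percent_fit(reading, slope, intercept, probe_length_in=12.0):
    """Invert reading = slope * depth + intercept, then express depth as % of probe length."""
    depth_in = (reading - intercept) / slope
    percent = depth_in * 100.0 / probe_length_in
    # Clamp to the physically meaningful range.
    return max(0.0, min(100.0, percent))


# 0 %, 25 %, 50 %, 75 %, 100 % of a 12-inch probe.
depths_in = [0, 3, 6, 9, 12]
# HYPOTHETICAL readings: only the 10 and 60 endpoints come from the post.
readings = [10, 21, 33, 47, 60]

slope, intercept = fit_line(depths_in, readings)
print(slope, intercept)
print(reading_to_percent_fit(33, slope, intercept))
```

If the discrepancy in the plot is a consistent curve rather than random scatter, a straight line cannot remove it, however it is fitted.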

Is there a better way of scaling than the formula I've used, to get a better fit?
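If the sensor's response is genuinely non-linear, a common alternative is a calibration table: store the reading measured at each known depth and interpolate linearly between neighbouring points. This piecewise-linear interpolation passes exactly through every calibration point. The sketch below is Python; the names are hypothetical, the table values are placeholders apart from the 10 and 60 endpoints, and it assumes the readings increase strictly with depth.

```python
from bisect import bisect_right

# HYPOTHETICAL calibration table: replace with your own measured readings.
CAL_READINGS = [10, 21, 33, 47, 60]  # must be strictly increasing
CAL_PERCENT = [0, 25, 50, 75, 100]   # immersion at which each reading was taken


def reading_to_percent_table(reading, cal_readings=CAL_READINGS, cal_percent=CAL_PERCENT):
    """Piecewise-linear interpolation of percent immersion from a calibration table."""
    # Clamp outside the calibrated range.
    if reading <= cal_readings[0]:
        return float(cal_percent[0])
    if reading >= cal_readings[-1]:
        return float(cal_percent[-1])
    # Find i such that cal_readings[i - 1] <= reading < cal_readings[i].
    i = bisect_right(cal_readings, reading)
    r0, r1 = cal_readings[i - 1], cal_readings[i]
    p0, p1 = cal_percent[i - 1], cal_percent[i]
    # Straight-line interpolation within this one segment.
    return p0 + (reading - r0) * (p1 - p0) / (r1 - r0)


print(reading_to_percent_table(27))  # 37.5
```

Adding more calibration points where the curve bends most improves the fit, and the same two-array lookup should port easily to a microcontroller.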
